On 29 April 2024, the UK’s Competition and Markets Authority (CMA) published its AI strategic update, the latest in a series of AI-related publications from the regulator.

It provides a strategic update on the regulator’s approach to AI, including its understanding of AI risks, what it is doing to address these, its AI capabilities, collaboration with others and proposed actions. It will be particularly useful to those seeking to understand the CMA’s areas of focus and the steps it plans to take in the future to regulate AI.

The CMA and AI

As the UK’s principal competition and consumer protection regulator, the CMA is, unsurprisingly, devoting substantial resources to the field of AI. It sees itself as playing a vital role in ensuring that consumers, businesses and the wider economy receive the benefits offered by AI, while mitigating harm.

The CMA is investing heavily in its capability and skills. The update notes that its specialist DaTA unit now has over 80 staff, including data scientists, data engineers, technologists, behavioural scientists, and digital forensics specialists.

Prior to the latest update, AI was considered by the CMA in a range of its publications including:

- 2021: ‘Algorithms: How they can reduce competition and harm consumers’

- 2023: ‘AI Foundation Models: Initial report’

- 2024: ‘AI Foundation Models: Update paper’

Why is AI relevant to competition?

It is widely recognised that AI presents immense opportunities for businesses and consumers but carries risk in several areas. From a competition perspective, there is the potential for AI tools to exacerbate or take advantage of existing problems in the market, or for inequitable access to tools to result in an imbalance in the delivery of AI benefits across society.

The CMA’s update articulates three examples of potential risks to competition:

- Impact on customer choices: the risk that AI systems which underpin consumer choices distort competition by unduly promoting choices which help the platform, to the detriment of others.

- Price setting: the risk that algorithms and AI tools are used to set prices in an anticompetitive way.

- Personalisation: the risk that AI systems may be used to personalise offers, which could include the selective targeting of customers in a way which excludes competitors or new entrants.

With regard to consumers, the CMA identifies several areas of concern including:

- The provision of false and misleading information to consumers, whether through ‘hallucinations’ or through malicious exploitation of existing unfair practices such as subscription traps, hidden advertising, or fake reviews.

- The targeting of vulnerable consumers via AI-enabled personalisation.

- Difficulties faced by consumers when seeking to distinguish AI-generated from human-generated content.

- Lack of disclosure as to when a consumer is interacting with an AI tool.

- Undue reliance by consumers on AI-generated information, such as reliance on misleading descriptions of goods or services.

- Insufficient transparency and unclear accountability in the AI value chain, making it difficult for consumers to understand risks and limitations.

Taking aim: large technology companies in the firing line

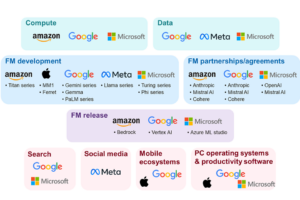

In its update, the CMA refers to a small number of large incumbent technology companies with existing power in the most important digital markets. It states that its “strongest concerns” relate to the fact that those firms “could profoundly shape the development of AI-related markets to the detriment of fair, open and effective competition”, resulting in reduced choice and quality, and higher prices, for consumers.

Noting that some of those firms have strong upstream positions, control over key access points or routes to market, and market power in related digital markets vulnerable to disruption, the CMA states that the “combination could mean that these incumbents have both the ability and incentive to shape the development of [foundation models]-related markets in their own interests, which could allow them both to protect existing market power and to extend it into new areas”.

The power held by some of these companies is summarised in the CMA update as follows:

Image: Figure 2, Examples of GAMMA firms across the AI FM value chain, CMA AI strategic update, 29 April 2024

The risk to competition posed by powerful technology incumbents is covered in more detail by the CMA in its ‘AI Foundation Models: Update paper’ published on 11 April 2024.

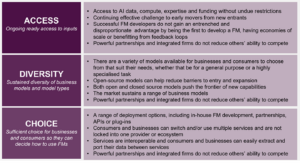

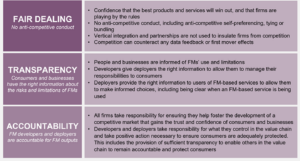

The CMA AI principles: guidance for firms

The CMA has produced a set of six principles and encourages firms to align their practices with these to shape positive market outcomes.

These are, according to the CMA, “… intended to complement the UK Government’s approach and its cross-sectoral AI principles but are focused (per the CMA’s remit) on the development of well-functioning economic markets that work well from a competition and consumer protection perspective”.

Image: Figure 3, CMA AI Principles, CMA AI strategic update, 29 April 2024

The CMA’s next steps

To address the risks it has identified, the CMA states it is taking a range of steps including:

- Public cloud infrastructure services: examining the conditions of competition relating to these services, as part of its ongoing Cloud Market Investigation.

- Monitoring of partnerships: monitoring current and emerging AI-related partnerships, particularly those involving firms in strong positions.

- Merger control: stepping up use of merger control to examine whether partnerships give rise to competition concerns.

- Priority for investigations: evaluating developments in the foundation model (FM) related markets, to inform decisions about future investigations. Areas of focus may include digital activities that are crucial access points or routes to market, such as mobile ecosystems, search, and productivity software.

With fair access to, and use of, AI being one of the greatest technology challenges of recent times, it is positive to see the CMA’s commitment to building its knowledge and acting as a leader in this space.

If you have questions about AI and competition, please contact Suzy Schmitz.

This article is for general information purposes only and does not constitute legal or professional advice. It should not be used as a substitute for legal advice relating to your particular circumstances. Please note that the law may have changed since the date of this article.